Gluster Peer Probe Failed Error Through Rpc Layer Retry Again Later

vagrant-cachier is a very useful plugin for Vagrant users. It reduces both the time spent and the amount of packages downloaded from the internet between each "vagrant destroy".

For instance, suppose you are using a CentOS 7 image in a Vagrant setup and want it updated with the latest packages every time you start working in the guest. The usual workflow is "vagrant up" -> "vagrant ssh" -> "sudo yum update -y" -> "Do your stuff" -> "vagrant destroy". But the amount of packages downloaded during yum update, and the time it consumes, is undesirable.

vagrant-cachier keeps the downloaded packages in the file system of the host machine and uses this as a cache for the guest. The yum update in the guest gets the packages from the cache, and the time and network usage are drastically reduced. Which is really cool!

I tried to install vagrant-cachier on my Fedora 23 laptop with KVM and libvirt and ran into the issue below.

Issue:

[root@dhcp35-203 ~]# vagrant plugin install vagrant-cachier

Installing the 'vagrant-cachier' plugin. This can take a few minutes...

Bundler, the underlying system Vagrant uses to install plugins, reported an error. The error is shown below. These errors are usually caused by misconfigured plugin installations or transient network problems. The error from Bundler is:

An error occurred while installing ruby-libvirt (0.5.2), and Bundler cannot continue.

Make sure that `gem install ruby-libvirt -v '0.5.2'` succeeds before bundling.

Gem::Ext::BuildError: ERROR: Failed to build gem native extension.

/usr/bin/ruby -r ./siteconf20151027-20676-13hfub7.rb extconf.rb

*** extconf.rb failed ***

Could not create Makefile due to some reason, probably lack of necessary libraries and/or headers. Check the mkmf.log file for more details. You may need configuration options.

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

extconf.rb:73:in `<main>': libvirt library not found in default locations (RuntimeError)

extconf failed, exit code 1

Gem files will remain installed in /root/.vagrant.d/gems/gems/ruby-libvirt-0.5.2 for inspection.

Results logged to /root/.vagrant.d/gems/extensions/x86_64-linux/ruby-libvirt-0.5.2/gem_make.out

After installing the "libvirt-devel" package the issue got resolved.

[root@dhcp35-203 ~]# dnf install libvirt-devel

[root@dhcp35-203 ~]# vagrant plugin install vagrant-cachier

Installing the 'vagrant-cachier' plugin. This can take a few minutes...

Installed the plugin 'vagrant-cachier (1.2.1)'!

However, the vagrant up command again failed.

$ vagrant init centos/7

Then we need to modify the Vagrantfile, as vagrant-cachier by default uses NFS to mount the host filesystem into the guest.

$ cat Vagrantfile

Vagrant.configure(2) do |config|

  config.vm.box = "centos/7"

  if Vagrant.has_plugin?("vagrant-cachier")

   config.cache.scope = :box

   config.cache.synced_folder_opts = { type: :nfs, mount_options: ['rw', 'vers=3', 'tcp', 'nolock'] }

  end

end

The next step was:

$ vagrant up

xxxxxxxxxxxxxxxxxxxx

The following SSH command responded with a non-zero exit status. Vagrant assumes that this means the command failed!

mount -o 'rw,vers=3,tcp,nolock' 192.168.121.1:'/home/lmohanty/.vagrant.d/cache/fedora/23-cloud-base' /tmp/vagrant-cache

Stdout from the command:

Stderr from the command:

mount.nfs: Connection timed out

After a little troubleshooting it turned out to be a firewall (i.e. iptables) issue. iptables was blocking the host's NFS service for the operation. As a temporary workaround I removed all the iptables rules from the host.

$ iptables -F

After that "vagrant up" worked fine and I could see the changes vagrant-cachier made in the guest to make the caching work.

Here are the things done by vagrant-cachier for the caching to work.

- Mounts ~/.vagrant.d/cache/<guest-name> from the host in the guest on /tmp/vagrant-cache/

- In the guest:

- It enables yum caching, i.e. sed -i 's/keepcache=0/keepcache=1/g' /etc/yum.conf

- It makes /var/cache/yum a symlink to /tmp/vagrant-cache/yum

[vagrant@localhost ~]$ ls -l /var/cache

total 8

drwx------. 2 root root 4096 Nov 15 00:08 ldconfig

drwxr-xr-x. 2 root root 4096 Jun 9 2022 man

lrwxrwxrwx. 1 root root 22 Nov 15 00:06 yum -> /tmp/vagrant-cache/yum
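The two guest-side steps can be sketched in a few lines of Python. This is only an illustration run against a temporary directory, not vagrant-cachier's actual code; the function names and the stand-in paths are assumptions made for the example.

```python
import os
import tempfile
from pathlib import Path


def enable_keepcache(yum_conf):
    """Flip keepcache=0 to keepcache=1, like the sed one-liner above."""
    text = yum_conf.read_text()
    yum_conf.write_text(text.replace("keepcache=0", "keepcache=1"))


def link_cache(shared_dir, cache_link):
    """Make cache_link a symlink that points at the shared (host-backed) directory."""
    shared_dir.mkdir(parents=True, exist_ok=True)
    if cache_link.is_symlink() or cache_link.exists():
        # A real tool would have to deal with an existing cache directory.
        raise FileExistsError(str(cache_link))
    cache_link.symlink_to(shared_dir)


def demo():
    """Run both steps in a throwaway directory and report the result."""
    with tempfile.TemporaryDirectory() as root:
        root = Path(root)
        conf = root / "yum.conf"              # stand-in for /etc/yum.conf
        conf.write_text("[main]\nkeepcache=0\n")
        enable_keepcache(conf)
        shared = root / "vagrant-cache" / "yum"   # stand-in for /tmp/vagrant-cache/yum
        link = root / "var-cache-yum"             # stand-in for /var/cache/yum
        link_cache(shared, link)
        return conf.read_text(), os.readlink(link)
```

Calling demo() returns the rewritten config text and the symlink target, which mirrors what the ls output shows for /var/cache/yum.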

vagrant-cachier works fine with CentOS 7 guests. However, I found an issue with Fedora 23 guests, as the default package manager there is dnf instead of yum. I have filed an issue with vagrant-cachier and am also working on a fix.

Greetings,

We take great pleasure in inviting you to FUDCon APAC 2015, to be held at MIT COE, Pune from 26 to 28 June 2015.

What is FUDCon?

FUDCon is the Fedora Users and Developers Conference, a major free software event held in various regions around the world, annually one per region.

The 2015 APAC edition of FUDCon will be held in Pune.

At FUDCon, you get to:

- Attend sessions on technology introductions and deep dives.

- Participate in hands-on workshops and sessions where like-minded people get together to discuss a project/technology.

- Be a part of Hack-fests where contributors work on specific initiatives, ranging from packaging and writing features to UI mock-ups.

- 'Un-Conference' at Bar Camps, where people interested in a wide range of issues/topics can come together to share and learn.

The speakers (that could be you) will pitch the topics they are interested in discussing in the morning of the event.

Anyone eager to listen in or participate can attend the session at the allotted time slot.

The most exciting part here is that everyone can stand up and talk in front of the big audience and share their views on the topic of their interest.

Why FUDCon?

FUDCon has always been a high quality event where Fedora contributors from different parts of the world converge to bounce ideas off each other.

Fedora has the best people, professionals and developers working for it, and they all interact at events like FUDCon. We have delegates flying in from far places to come and talk to our audience about Fedora and the future of Fedora (which is the upstream of a premier enterprise operating system).

Thus, FUDCon would be a great opportunity for you to engage with some of the best minds in the industry, update yourselves on the latest and cutting-edge technological innovations, and experience the excitement and thrill of the open source environment.

For more information, visit our website: fudcon.in

Looking forward to seeing you there.

For any help contact: Rupali Talwatkar at rtalwatk@redhat.com

Reference : https://fedoraproject.org/wiki/Fudcon/Invitation_Letter_College

FUDCon is the Fedora Users and Developers Conference, a major free software event. It is an annual event per region (e.g. APAC). This year FUDCon APAC is happening at Pune, India from 26th June to 28th June 2015. For details check out the FUDCon India home page.

FUDCon is always free to attend for anyone in the world. If you are looking for open source and free software contributors/developers, want to learn new technologies, want to learn how to contribute to Fedora and other upstream projects, or want to hang out and hack on interesting stuff, it is one of the finest places to be.

This year we are having a Linux container track at FUDCon for a full day. The idea of a separate track came from the large number of talk/workshop proposals related to containers and the high interest from the community in learning about Linux containers, e.g. Docker-related technologies.

To start with, we have a talk about "Running Project Atomic and Docker on Fedora" in the main track by me and Aditya on Saturday, 27th June 2015. The container track is on the next day, i.e. Sunday, 28th June 2015.

Here is the list of talks and workshops planned for the container track.

- Docker Basics Workshop

- Fedora Atomic Workshop

- Hands-On Kubernetes

- Orchestration of Docker containers using Openshift v3 and Kubernetes

Note: The topics of the container track have changed since I published this blog, so I have updated the above list.

For the workshops, you need to carry your laptop. We are planning to provide preconfigured virtual machine images for KVM and VirtualBox. We are also planning to distribute the images using USB sticks/thumb drives, so if you have a USB/thumb drive, please carry it with you, as it will help you and others quickly set up their environments.

So if you are interested in learning about Linux containers or hacking around Linux containers and Docker, come join us on the container track at FUDCon 2015, Pune.

Recently I started working on creating a Vagrant box [1] for the Atomic Developer Bundle project. For testing the Vagrant box I wanted to use CentOS 7 with KVM + Vagrant.

However, yum install for the Vagrant packages failed, as Vagrant packages are not available in CentOS 7 itself. They are, however, available through the CentOS software collections SIG.

Thanks to the SCL (softwarecollections.org) community for making the Vagrant packages available.

So here are the steps that will install Vagrant with the libvirt provider on a CentOS 7 machine.

$ sudo yum -y install centos-release-scl

$ sudo yum -y install sclo-vagrant1 qemu-kvm

$ sudo scl enable sclo-vagrant1 bash

# Start libvirtd

$ sudo systemctl start libvirtd

# Permanently enable libvirtd

$ sudo systemctl enable libvirtd

Refer to the documentation [2] of the Vagrant project for more details.

[1] https://github.com/projectatomic/adb-atomic-developer-bundle

[2] https://www.vagrantup.com/

[2] https://docs.vagrantup.com/v2/

Comments/Suggestions are welcome!

It was a wonderful experience attending Devconf 2015 in Brno, Czech Republic. The conference hosted some really interesting talks.

Talks I attended

- Keynote: The Future of Red Hat

- What is kubernetes?

- Docker Security

- Fedora Atomic

- Performance tuning of Docker and RHEL Atomic

- GlusterFS - Architecture and Roadmap

- golang - the Good, the bad and the ugly

- Vagrant for your Fedora

- CI Provisioner – slave and test resources in Openstack, Beaker, Foreman, and Docker through one mechanism

- Effective Beaker(workshop)

- Project Atomic discussions (not listed in the schedule, but interested folks got together)

- Running a CentOS SIG (My talk)

- CentOS: A platform for your success

- Super Privileged Containers (SPC)

- Ceph FS development update

Apart from these, I attended a few other talks that were not useful to me for various reasons, hence I did not list them.

Most of the talks were live streamed and recorded. You can find them at Red Hat Czech's YouTube channel, i.e. https://www.youtube.com/user/RedHatCzech

Because of time constraints I missed some of the good talks as well, e.g. the systemd and OpenShift talks.

Also, because of Devconf, I can now put faces to many IRC nicknames and email addresses. It was a nice experience to meet in person people with whom I had only communicated through IRC and email.

Many thanks to the organisers, speakers and participants for the success of Devconf 2015. Looking forward to the next Devconf.

The first CentOS Dojo in India took place in Bangalore on 15th November (Saturday) 2014 at the Red Hat Bangalore office. Red Hat sponsored the event.

I was a co-organizer of the Dojo along with Dominic and Karanbir Singh. Around 90 people RSVPed for the event, but around 40 (mostly system administrators and new users) attended.

The first talk was by Aditya Patawari on "An introduction to Docker and Project Atomic". The talk included a demo and introduced the audience to Docker and Atomic host. Most of the attendees had questions on Docker, as they had used it or had heard about it. There were some questions about the differences between CoreOS and Project Atomic. The slides are available at http://www.slideshare.net/AdityaPatawari/docker-centosdojo. Overall this talk gave a fair idea of Docker and the Atomic project.

The second talk was "Be Secure with SELinux Gyan" by Rejy M Cyriac. This session was about troubleshooting SELinux issues and an introduction to creating custom SELinux policy modules. Rejy made the talk interesting by distributing SELinux stickers to attendees who asked interesting questions or answered questions. Slides can be found here.

After these two talks we took a lunch break for around 1 hour. During the lunch break we distributed the CentOS T-shirts and got a chance to socialize with the attendees.

The first session post lunch was "Scale out storage on CentOS using GlusterFS" by Raghavendra Talur. The talk introduced the audience to GlusterFS and its important high level concepts, and a demo was shown using packages from the CentOS storage SIG. Slides can be found at slideshare.

The next session was "Network Debugging" by Jijesh Kalliyat. This talk covered almost all the basic concepts/fundamentals and the network diagnostic tools required to troubleshoot a network issue. It also included a demo of using Wireshark and tcpdump to debug network issues. Slides are available here.

Before the next talk, we took a short break and clicked some group pictures of everyone present for the Dojo.

The final session was on "Systemd on CentOS" by Saifi Khan. The talk covered a lot of areas, e.g. a comparison between SysVinit and systemd, concurrency at scale, how systemd is more scalable than other available init systems, some similarity of design principles with CoreOS, and how it is better suited for Linux container technology. Saifi also talked about how systemd has saved his system from being unusable. His liking for systemd was quite evident from the talk and his enthusiasm.

Overall it was an awesome experience participating in the Dojo, as it covered a wide variety of topics that are important for deploying CentOS for various purposes.

Bangalore Dojo link: http://wiki.centos.org/Events/Dojo/Bangalore2014

Group Photo. You can see happy faces there 🙂

Bangalore Dojo, 2014

RPMs for 3.4.6beta1 are available for testing at http://download.gluster.org/pub/gluster/glusterfs/qa-releases/3.4.6beta1/

The above repo contains RPMs for CentOS/EL 5, 6, 7 and Fedora 19, 20, 21, 22. Packages for other platforms/distributions will be available once they are built.

If you find any issue with the RPMs, please report it to the Gluster community @ http://www.gluster.org/community/index.html

Here are the topics this blog is going to cover.

- Samba Server

- Samba VFS

- Libgfapi

- GlusterFS VFS plugin for Samba and libgfapi

- Without GlusterFS VFS plugin

- FUSE mount vs VFS plugin

About Samba Server:

Samba server runs on Unix and Linux/GNU operating systems. Windows clients can talk to Linux/GNU/Unix systems through Samba server. It provides interoperability between Windows and Linux/Unix systems. Initially it was created to provide printer sharing and file sharing mechanisms between Unix/Linux and Windows. As of now the Samba project is doing much more than just file and printer sharing.

Samba server works as a semantic translation engine/machine. Windows clients talk in Windows syntax, e.g. the SMB protocol, while Unix/Linux/GNU file-systems understand requests in POSIX. Samba converts Windows syntax to *nix/GNU syntax and vice versa.

This article is about Samba integration with GlusterFS. For specific details I have taken the example of GlusterFS deployed on Linux/GNU.

If you have never heard of the Samba project before, you should read more about it before going further into this blog.

Here are important links/pointers for further study:

- what is Samba?

- Samba introduction

Samba VFS:

Samba code is very modular in nature. Samba VFS code is divided into two parts, i.e. the Samba VFS layer and VFS modules.

The purpose of the Samba VFS layer is to act as an interface between the Samba server and the layers below. When the Samba server gets requests from Windows clients over the SMB protocol, it passes them to the Samba VFS modules.

A Samba VFS module, i.e. plugin, is a shared library (.so) that implements some or all of the functions which the Samba VFS layer, i.e. the interface, makes available. Samba VFS modules can be stacked on each other (if they are designed to be stacked).

For more about the Samba VFS layer, please refer to http://unix4.com/w/writing-a-samba-vfs-richard-sharpe-2-oct-2011-e6438-pdf.pdf

The Samba VFS layer passes the request to VFS modules. If the Samba share is for a native Linux/Unix file-system, the call goes to the default VFS module. The default VFS module forwards the call to the system layer, i.e. the operating system. For a user space file-system like GlusterFS, VFS layer calls are implemented through a VFS module, i.e. the VFS plugin for GlusterFS. The plugin redirects the requests (i.e. fops) to the GlusterFS APIs, i.e. libgfapi. It implements or maps all VFS layer calls using libgfapi.
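The layering described above can be pictured with a small Python toy model. It is only a sketch of the idea of stacked modules that either handle a call or pass it on; the class and method names are invented for illustration and are not Samba's real VFS interface (which is a C API).

```python
class DefaultVFS:
    """Stand-in for the default module: hands the call to the operating system."""

    def handle(self, op, path):
        return "os:{}:{}".format(op, path)


class GlusterVFS:
    """Stand-in for the GlusterFS plugin: maps the fop to a libgfapi-style call."""

    def handle(self, op, path):
        return "gfapi:{}:{}".format(op, path)


class AuditVFS:
    """A stackable module: records the call, then passes it to the next module."""

    def __init__(self, next_module):
        self.next_module = next_module
        self.log = []

    def handle(self, op, path):
        self.log.append((op, path))
        return self.next_module.handle(op, path)


def build_stack(share_is_gluster):
    """Pick the bottom module per share, then stack the audit module on top."""
    backend = GlusterVFS() if share_is_gluster else DefaultVFS()
    return AuditVFS(backend)
```

With build_stack(True), a call such as handle("open", "/f") passes through the stacked module and ends at the GlusterFS stand-in; with build_stack(False) the same call ends at the default module instead.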

libgfapi:

libgfapi (i.e. glusterfs api) is a set of APIs which can directly talk to GlusterFS. Libgfapi is another access method for GlusterFS, like NFS, SMB and FUSE. Libgfapi bindings are available for C, Python, Go and more programming languages. Applications can be developed which directly use GlusterFS without a GlusterFS volume mount.

GlusterFS VFS plugin for Samba and libgfapi:

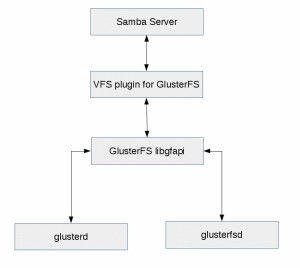

Here is the schematic diagram of how communication works betwixt different layers.

Samba Server: This represents Samba Server and Samba VFS layer

VFS plugin for GlusterFS: This implements or maps relevant VFS layer fops to libgfapi calls.

glusterd: Management daemon of Glusterfs node i.e. server.

glusterfsd: Brick process of Glusterfs node i.e. server.

The client requests come to the Samba server, and the Samba server redirects the calls to GlusterFS's VFS plugin through the Samba VFS layer. The VFS plugin calls the relevant libgfapi functions. Libgfapi acts as a client: it contacts glusterd for vol file information (i.e. information about the gluster volume, translators and involved nodes), then forwards requests to the appropriate glusterfsd, i.e. brick processes, where the requests actually get serviced.

If you want to know specifics about the setup to share a GlusterFS volume through the Samba VFS plugin, refer to the link below.

https://lalatendumohanty.wordpress.com/2014/02/11/using-glusterfs-with-samba-and-samba-vfs-plugin-for-glusterfs-on-fedora-20/

Without GlusterFS VFS plugin:

Without the GlusterFS VFS plugin, we can still share a GlusterFS volume through Samba server. This can be done through the native glusterfs mount, i.e. FUSE (file system in user space). We need to mount the volume using FUSE, i.e. the glusterfs native mount, on the same machine where Samba server is running, and then share the mount point using Samba server. As we are not using the VFS plugin for GlusterFS here, Samba will treat the mounted GlusterFS volume as a native file-system. The default VFS module will be used and the file-system calls will be sent to the operating system. The flow is the same as for any native file system shared through Samba.

FUSE mount vs VFS plugin:

If you are not familiar with file systems in user space, please read about FUSE, i.e. file system in user space.

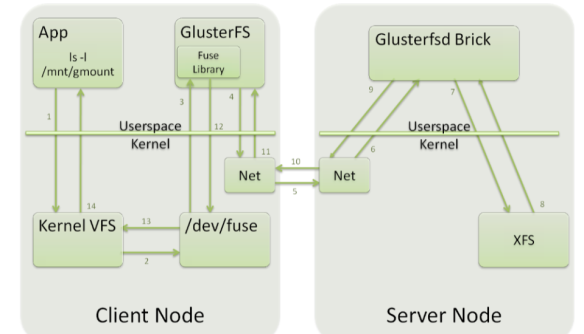

For FUSE mounts, file system fops from the Samba server go to the user space FUSE mount point -> Kernel VFS -> /dev/fuse -> GlusterFS and come back along the same path. Refer to the diagrams below for details. Consider the Samba server as an application which runs on the FUSE mount point.

FUSE mount architecture for GlusterFS

Notice that process context switches happen between user and kernel space in the above architecture. This is going to be a key differentiating factor when compared with the libgfapi based VFS plugin.

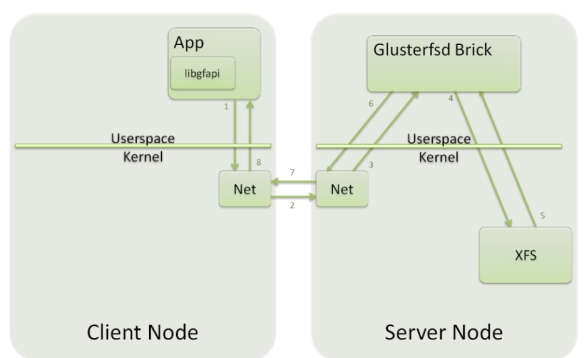

For the Samba VFS plugin implementation, see the diagram below. With the plugin, Samba calls get converted to libgfapi calls and libgfapi forwards the requests to GlusterFS.

Libgfapi architecture for GlusterFS

The above pictures are copied from this presentation:

Advantages of the libgfapi based Samba plugin vs FUSE mount:

- With libgfapi, there are no kernel VFS layer context switches. This results in performance benefits compared to a FUSE mount.

- With a separate Samba VFS module, i.e. plugin, features (e.g. more NTFS functionality) which native Linux file systems do not support can be provided in GlusterFS and supported with Samba.

GlusterFS CLI code follows a client-server architecture, and we should keep that in mind while trying to understand the CLI framework, i.e. "glusterd" acts as the server and the gluster binary (i.e. /usr/sbin/gluster) acts as the client.

In this write-up I have taken the "gluster volume create" command and will provide code snippets, gdb backtraces and Wireshark network traces.

- All function calls start when "gluster volume create <volume-name> <brick1> <brick2>" is entered on the command line.

- "gluster", i.e. main() in cli.c, processes the command line input and sends it to glusterd with the relevant callback function information, as mentioned below.

- To be specific, gf_cli_create_volume() in cli/src/cli-rpc-ops.c sends the request to glusterd with the callback function information, i.e. gf_cli_create_volume_cbk(). For more information look at the code snippet below and also the gdb backtrace, i.e. #bt_1 (check #bt_1 below)

- ret = cli_to_glusterd (&req, frame, gf_cli_create_volume_cbk, (xdrproc_t) xdr_gf_cli_req, dict, GLUSTER_CLI_CREATE_VOLUME, this, cli_rpc_prog, NULL);

- The CLI contacts glusterd on localhost:24007, as glusterd's management port is 24007 for TCP.

- glusterd uses the identifier passed in the above call, i.e. "GLUSTER_CLI_CREATE_VOLUME", to find the relevant function so that it can take the execution ahead.

- Once the information is sent to glusterd, the client just waits for the reply from it. The wait happens in "event_dispatch (ctx->event_pool);" in main() in cli.c. #bt_2

There are some other important functions (on the client side of the CLI framework) to check out. If you are debugging any CLI issue, there is a high probability you will come across these functions. They are listed below.

- cli_cmd_volume_create_cbk() :

- The call to gf_cli_create_volume() goes from here, and the mechanism to find gf_cli_create_volume() is a little different here.

- Check the structure of rpc_clnt_procedure_t and the use of it in cli_cmd_volume_create_cbk(), i.e. "proc = &cli_rpc_prog->proctable[GLUSTER_CLI_CREATE_VOLUME];"

- parse_cmdline() and cli_opt_parse() : These functions parse the command line input

GDB backtraces of function calls involved in the client side of the framework:

#bt_1:

Breakpoint 1, gf_cli_create_volume (frame=0x664544, this=0x3b9ac83600, data=0x679e48) at cli-rpc-ops.c:3240

3240 gf_cli_req req = {{0,}};

Missing separate debuginfos, use: debuginfo-install glibc-2.12-1.107.el6_4.5.x86_64 keyutils-libs-1.4-4.el6.x86_64 krb5-libs-1.10.3-10.el6_4.6.x86_64 libcom_err-1.41.12-14.el6_4.4.x86_64 libselinux-2.0.94-5.3.el6_4.1.x86_64 libxml2-2.7.6-12.el6_4.1.x86_64 ncurses-libs-5.7-3.20090208.el6.x86_64 openssl-1.0.0-27.el6_4.2.x86_64 readline-6.0-4.el6.x86_64 zlib-1.2.3-29.el6.x86_64

(gdb) bt

#0 gf_cli_create_volume (frame=0x664544, this=0x3b9ac83600, data=0x679e48) at cli-rpc-ops.c:3240

#1 0x0000000000411587 in cli_cmd_volume_create_cbk (state=0x7fffffffe270, word=<value optimized out>, words=<value optimized out>, wordcount=<value optimized out>) at cli-cmd-volume.c:410

#2 0x000000000040aa8b in cli_cmd_process (state=0x7fffffffe270, argc=5, argv=0x7fffffffe460) at cli-cmd.c:140

#3 0x000000000040a510 in cli_batch (d=<value optimized out>) at input.c:34

#4 0x0000003b99a07851 in start_thread () from /lib64/libpthread.so.0

#5 0x0000003b996e894d in clone () from /lib64/libc.so.6

#bt_2:

#0 gf_cli_create_volume_cbk (req=0x68a57c, iov=0x68a5bc, count=1, myframe=0x664544) at cli-rpc-ops.c:762

#1 0x0000003b9b20dd85 in rpc_clnt_handle_reply (clnt=0x68a390, pollin=0x6a3f20) at rpc-clnt.c:772

#2 0x0000003b9b20f327 in rpc_clnt_notify (trans=<value optimized out>, mydata=0x68a3c0, event=<value optimized out>, data=<value optimized out>) at rpc-clnt.c:905

#3 0x0000003b9b20ab78 in rpc_transport_notify (this=<value optimized out>, event=<value optimized out>, data=<value optimized out>) at rpc-transport.c:512

#4 0x00007ffff711fd86 in socket_event_poll_in (this=0x6939b0) at socket.c:2119

#5 0x00007ffff712169d in socket_event_handler (fd=<value optimized out>, idx=<value optimized out>, data=0x6939b0, poll_in=1, poll_out=0, poll_err=0) at socket.c:2229

#6 0x0000003b9aa62327 in event_dispatch_epoll_handler (event_pool=0x662710) at event-epoll.c:384

#7 event_dispatch_epoll (event_pool=0x662710) at event-epoll.c:445

#8 0x0000000000409891 in main (argc=<value optimized out>, argv=<value optimized out>) at cli.c:666

Code flow for "volume create" command in "glusterd" i.e. server side of cli framework

- As mentioned in the CLI client's code flow, the identifier "GLUSTER_CLI_CREATE_VOLUME" helps glusterd find the relevant function for the command. To see how it is done, check the structure gd_svc_cli_actors in glusterd-handler.c. I have also copied a small snippet of it.

- rpcsvc_actor_t gd_svc_cli_actors[ ] = { [GLUSTER_CLI_PROBE] = { "CLI_PROBE", GLUSTER_CLI_PROBE, glusterd_handle_cli_probe, NULL, 0, DRC_NA}, [GLUSTER_CLI_CREATE_VOLUME] = { "CLI_CREATE_VOLUME", GLUSTER_CLI_CREATE_VOLUME, glusterd_handle_create_volume, NULL, 0, DRC_NA},

- Hence the call goes like this: glusterd_handle_create_volume -> __glusterd_handle_create_volume

- In __glusterd_handle_create_volume() all required validations are done, e.g. whether a volume with the same name already exists, whether a brick is on a separate partition or the root partition, and the number of bricks. The gfid* for the volume is also generated here.

- Another important function is gd_sync_task_begin(). Maybe I will go into the details of this function in a future write-up, because as of now I don't understand it completely.

- Once glusterd creates the volume, it sends the data back to the CLI client. This happens in glusterd-rpc-ops.c:glusterd_op_send_cli_response()

- glusterd_to_cli (req, cli_rsp, NULL, 0, NULL, xdrproc, ctx);
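The dispatch idea in this flow (a table indexed by procedure number on the server, plus a callback registered by the client) can be sketched in Python. This is a simplified model, not GlusterFS code: the procedure numbers, handler bodies and function names are hypothetical, and the "RPC" here is a plain synchronous function call rather than a network round trip.

```python
from enum import IntEnum


class CliProc(IntEnum):
    # Hypothetical procedure numbers; the real values live in the GlusterFS sources.
    PROBE = 1
    CREATE_VOLUME = 2


def handle_probe(payload):
    return {"op": "probe", "ok": True}


def handle_create_volume(payload):
    # Stand-in for the validation step: refuse an empty brick list.
    if not payload.get("bricks"):
        return {"op": "create", "ok": False}
    return {"op": "create", "ok": True, "volume": payload["name"]}


# Server side: actor table keyed by procedure number, like gd_svc_cli_actors.
ACTORS = {
    CliProc.PROBE: ("CLI_PROBE", handle_probe),
    CliProc.CREATE_VOLUME: ("CLI_CREATE_VOLUME", handle_create_volume),
}


def server_dispatch(procnum, payload):
    """Look up the actor for the procedure number and run it."""
    _name, actor = ACTORS[CliProc(procnum)]
    return actor(payload)


def client_call(procnum, payload, callback):
    """Send a request and hand the reply to the registered callback."""
    reply = server_dispatch(int(procnum), payload)  # stands in for the RPC round trip
    return callback(reply)


def create_volume_cbk(reply):
    return "created " + reply["volume"] if reply["ok"] else "failed"
```

For example, client_call(CliProc.CREATE_VOLUME, {"name": "testvol", "bricks": ["b1", "b2"]}, create_volume_cbk) walks the same path as the text: procedure number in, actor lookup on the server, reply handed to the callback on the client.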

Network traces captured during the "create volume" command:

::1 -> ::1 Gluster CLI 292 V2 CREATE_VOLUME Call

node1 -> node2 GlusterD Management 200 V2 CLUSTER_LOCK Call

node2 -> node1 GlusterD Management 168 V2 CLUSTER_LOCK Reply (Call In

node1 -> node2 GlusterD Management 540 V2 STAGE_OP Call

node2 -> node1 GlusterD Management 184 V2 STAGE_OP Reply

127.0.0.1 -> 127.0.0.1 GlusterFS Callback 112 [TCP Previous segment

127.0.0.1 -> 127.0.0.1 GlusterFS Handshake 168 V2 GETSPEC Call

127.0.0.1 -> 127.0.0.1 GlusterFS Callback 112 [TCP Previous segment

127.0.0.1 -> 127.0.0.1 GlusterFS Handshake 160 V2 GETSPEC Call

node1 -> node2 GlusterFS Callback 112 V1 FETCHSPEC Call

::1 -> ::1 GlusterFS Callback 132 V1 FETCHSPEC Call

::1 -> ::1 GlusterFS Handshake 192 V2 GETSPEC Call

::1 -> ::1 GlusterFS Callback 132 [TCP Previous segment

::1 -> ::1 GlusterFS Callback 132 [TCP Previous segment

::1 -> ::1 GlusterFS Callback 132 V1 FETCHSPEC Call

node1 -> node2 GlusterD Management 540 V2 COMMIT_OP Call

127.0.0.1 -> 127.0.0.1 GlusterFS Handshake 984 V2 GETSPEC Reply

127.0.0.1 -> 127.0.0.1 GlusterFS Handshake 1140 V2 GETSPEC Reply

::1 -> ::1 GlusterFS Handshake 1436 V2 GETSPEC Reply

::1 -> ::1 GlusterFS Handshake 180 V2 GETSPEC Call

::1 -> ::1 GlusterFS Handshake 1160 V2 GETSPEC Reply

::1 -> ::1 GlusterFS Handshake 188 V2 GETSPEC Call

::1 -> ::1 GlusterFS Handshake 1004 V2 GETSPEC Reply

node2 -> node1 GlusterFS Callback 112 V1 FETCHSPEC Call

node2 -> node1 GlusterD Management 184 V2 COMMIT_OP Reply

node1 -> node2 GlusterD Management 200 V2 CLUSTER_UNLOCK Call

node2 -> node1 GlusterD Management 168 V2 CLUSTER_UNLOCK Reply

::1 -> ::1 Gluster CLI 464 V2 CREATE_VOLUME Reply
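To pull the order of the management operations between the two nodes out of a capture summary like this, a few lines of Python are enough. The sample below is an abridged copy of the management lines from the trace (the handshake and callback traffic is left out), and the regular expression assumes the column layout shown here rather than any official Wireshark export format.

```python
import re

# Abridged management-protocol lines in the same shape as the capture summary.
TRACE = """\
::1 -> ::1 Gluster CLI 292 V2 CREATE_VOLUME Call
node1 -> node2 GlusterD Management 200 V2 CLUSTER_LOCK Call
node2 -> node1 GlusterD Management 168 V2 CLUSTER_LOCK Reply
node1 -> node2 GlusterD Management 540 V2 STAGE_OP Call
node2 -> node1 GlusterD Management 184 V2 STAGE_OP Reply
node1 -> node2 GlusterD Management 540 V2 COMMIT_OP Call
node2 -> node1 GlusterD Management 184 V2 COMMIT_OP Reply
node1 -> node2 GlusterD Management 200 V2 CLUSTER_UNLOCK Call
node2 -> node1 GlusterD Management 168 V2 CLUSTER_UNLOCK Reply
::1 -> ::1 Gluster CLI 464 V2 CREATE_VOLUME Reply
"""

LINE = re.compile(r"GlusterD Management \d+ V\d+ (\w+) (Call|Reply)")


def management_phases(trace):
    """Return the management operations in the order their calls were sent."""
    phases = []
    for line in trace.splitlines():
        match = LINE.search(line)
        if match and match.group(2) == "Call":
            phases.append(match.group(1))
    return phases
```

Run on the sample, management_phases(TRACE) lists the operations in the order lock, stage, commit, unlock, which is the sequence visible between node1 and node2 in the trace.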

The function calls below happened during the volume create, as seen in /var/log/glusterfs/etc-glusterfs-glusterd.vol.log. This was collected after running glusterd in DEBUG mode.

[glusterd-volume-ops.c:69:__glusterd_handle_create_volume]

[glusterd-utils.c:412:glusterd_check_volume_exists]

[glusterd-utils.c:155:glusterd_lock]

[glusterd-utils.c:412:glusterd_check_volume_exists]

[glusterd-utils.c:597:glusterd_brickinfo_new]

[glusterd-utils.c:659:glusterd_brickinfo_new_from_brick]

[glusterd-utils.c:457:glusterd_volinfo_new]

[glusterd-utils.c:541:glusterd_volume_brickinfos_delete]

[store.c:433:gf_store_handle_destroy]

[glusterd-utils.c:571:glusterd_volinfo_delete]

[glusterd-utils.c:597:glusterd_brickinfo_new]

[glusterd-utils.c:659:glusterd_brickinfo_new_from_brick]

[glusterd-utils.c:457:glusterd_volinfo_new]

[glusterd-utils.c:541:glusterd_volume_brickinfos_delete]

[store.c:433:gf_store_handle_destroy]

***************************************************

[glusterd-utils.c:5244:glusterd_hostname_to_uuid]

[glusterd-utils.c:878:glusterd_volume_brickinfo_get]

[glusterd-utils.c:887:glusterd_volume_brickinfo_get]

[glusterd-op-sm.c:4284:glusterd_op_commit_perform]

[glusterd-op-sm.c:3404:glusterd_op_modify_op_ctx]

[glusterd-rpc-ops.c:193:glusterd_op_send_cli_response]

[socket.c:492:__socket_rwv]

[socket.c:2235:socket_event_handler]

gfid*: it is a UUID maintained by GlusterFS. GlusterFS uses gfid extensively, and a separate write-up on gfid would do it more justice.

I hope it will give some insight to anyone trying to understand the GlusterFS CLI framework.

This blog covers the steps and implementation details for using the GlusterFS Samba VFS plugin.

Please refer to the link below if you are looking for architectural information on the GlusterFS Samba VFS plugin, or the difference between a FUSE mount and the Samba VFS plugin.

https://lalatendumohanty.wordpress.com/2014/04/20/glusterfs-vfs-plugin-for-samba/

I have set up a two-node GlusterFS cluster with Fedora 20 (minimal install) VMs. Each VM has 3 separate XFS partitions of 100GB each.

One of the Gluster nodes is used as a Samba server in this setup.

I had originally tested this with Fedora 20, but this example should work fine with the latest Fedoras, i.e. F21 and F22.

GlusterFS Version: glusterfs-3.four.2-1.fc20.x86_64

Samba version: samba-4.1.3-2.fc20.x86_64

Post installation, the "df -h" output looked like below in the VMs:

$df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/fedora_dhcp159--242-root 50G 2.2G 45G 5% /

devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs 2.0G 432K 2.0G 1% /run

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

tmpfs 2.0G 0 2.0G 0% /tmp

/dev/vda1 477M 103M 345M 23% /boot

/dev/mapper/fedora_dhcp159--242-home 45G 52M 43G 1% /home

/dev/mapper/gluster_vg1-gluster_lv1 100G 539M 100G 1% /gluster/brick1

/dev/mapper/gluster_vg2-gluster_lv2 100G 406M 100G 1% /gluster/brick2

/dev/mapper/gluster_vg3-gluster_lv3 100G 33M 100G 1% /gluster/brick3

You can use the following commands to create XFS partitions:

1. pvcreate /dev/vdb

2. vgcreate VG_NAME /dev/vdb

3. lvcreate -n LV_NAME -l 100%PVS VG_NAME /dev/vdb

4. mkfs.xfs -i size=512 LV_PATH
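When several bricks have to be prepared, it can help to generate the four commands from the device and the VG/LV names. The helper below is a hypothetical sketch that only builds and prints the command lines without running anything; it assumes LV_PATH takes the usual /dev/VG_NAME/LV_NAME form, and the example names are taken from the df output above.

```python
import shlex


def brick_commands(device, vg_name, lv_name):
    """Return the four commands from the list above as argument lists."""
    lv_path = "/dev/{}/{}".format(vg_name, lv_name)  # assumed LV_PATH layout
    return [
        ["pvcreate", device],
        ["vgcreate", vg_name, device],
        ["lvcreate", "-n", lv_name, "-l", "100%PVS", vg_name, device],
        ["mkfs.xfs", "-i", "size=512", lv_path],
    ]


def render(commands):
    """Turn argument lists into shell-safe command strings for review."""
    return [" ".join(shlex.quote(arg) for arg in cmd) for cmd in commands]


if __name__ == "__main__":
    for line in render(brick_commands("/dev/vdb", "gluster_vg1", "gluster_lv1")):
        print(line)
```

Printing the commands first, rather than executing them, leaves room to double-check the device name before anything destructive runs.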

Following are the steps to be performed and the packages to be installed on each node (which is Fedora 20 in my case)

#Change SELinux to either "permissive" or "disabled" mode

# To put SELinux in permissive mode

$setenforce 0

#To see the current mode of SELinux

$getenforce

SELinux policy rules for Gluster are present in recent Fedora releases, e.g. F21, F22 or later, so SELinux should work fine with Gluster on those releases.

#Remove all iptables rules, so that they do not interfere with Gluster

$iptables -F

yum install glusterfs-server

yum install samba-vfs-glusterfs

yum install samba-client

#samba-vfs-glusterfs RPMs for CentOS, RHEL, Fedora19/18 are available at http://download.gluster.org/pub/gluster/glusterfs/samba/

#To start glusterd and auto start it after boot

$systemctl start glusterd

$systemctl enable glusterd

$systemctl status glusterd

#To start smb and auto start it after boot

$systemctl start smb

$systemctl enable smb

$systemctl status smb

#Create the gluster volume and start it. (Run the commands below from Server1_IP)

$gluster peer probe Server2_IP

$gluster peer status

Number of Peers: 1

Hostname: Server2_IP

Port: 24007

Uuid: aa6f71d9-0dfe-4261-a2cd-5f281632aaeb

State: Peer in Cluster (Connected)
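
If you script the setup, it helps to check the peer state before creating the volume. The snippet below is a rough sketch that only parses the text format shown above; peers_connected is a hypothetical helper name, not part of the gluster CLI.

```shell
#!/bin/sh
# Sketch: read `gluster peer status` output on stdin and succeed only if
# every listed peer is in the "Peer in Cluster (Connected)" state.
# peers_connected is a hypothetical helper name.
peers_connected() {
    awk '
        /^Number of Peers:/ { expected = $4 }
        /^State: Peer in Cluster \(Connected\)/ { ok++ }
        END { exit !(expected + 0 > 0 && ok + 0 == expected + 0) }
    '
}
```

Usage would be something like: gluster peer status | peers_connected && echo "all peers connected". It returns non-zero when there are no peers at all, or when any peer is in another state.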

$gluster v create testvol Server2_IP:/gluster/brick1/testvol-b1 Server1_IP:/gluster/brick1/testvol-b2

$gluster v start testvol

#Modify smb.conf for Samba share

$vi /etc/samba/smb.conf

#

[testvol]

comment = For samba share of volume testvol

path = /

read only = No

guest ok = Yes

kernel share modes = No

vfs objects = glusterfs

glusterfs:loglevel = 7

glusterfs:logfile = /var/log/samba/glusterfs-testvol.log

glusterfs:volume = testvol

#For debug logs you can change the log level to 10, e.g.: "glusterfs:loglevel = 10"

#Do not miss "kernel share modes = No", otherwise you won't be able to write anything to the share
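
Since a missing "kernel share modes = No" is easy to overlook, a quick scripted check can catch it. This is a minimal sketch, assuming smb.conf-style text ("[section]" headers followed by "key = value" lines) on stdin; share_has is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeed if SECTION in smb.conf-style text on stdin contains
# "KEY = VALUE" (compared case-insensitively, surrounding spaces ignored).
# share_has SECTION KEY VALUE  (hypothetical helper)
share_has() {
    awk -v section="[$1]" -v key="$2" -v want="$3" '
        /^[ \t]*\[/ {
            # A new section header: remember whether it is the one we want
            line = $0
            gsub(/^[ \t]+|[ \t]+$/, "", line)
            in_section = (line == section)
            next
        }
        in_section && /=/ {
            k = $0; sub(/=.*/, "", k)
            v = $0; sub(/^[^=]*=/, "", v)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (tolower(k) == tolower(key) && tolower(v) == tolower(want)) found = 1
        }
        END { exit !found }
    '
}
```

For example: share_has testvol "kernel share modes" No < /etc/samba/smb.conf || echo "kernel share modes is not set to No for testvol".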

#Verify that your changes are correctly understood by Samba

$testparm -s

Load smb config files from /etc/samba/smb.conf

rlimit_max: increasing rlimit_max (1024) to minimum Windows limit (16384)

Processing section "[homes]"

Processing section "[printers]"

Processing section "[testvol]"

Loaded services file OK.

Server role: ROLE_STANDALONE

[global]

workgroup = MYGROUP

server string = Samba Server Version %v

log file = /var/log/samba/log.%m

max log size = 50

idmap config * : backend = tdb

cups options = raw

[homes]

comment = Home Directories

read only = No

browseable = No

[printers]

comment = All Printers

path = /var/spool/samba

printable = Yes

print ok = Yes

browseable = No

[testvol]

comment = For samba share of volume testvol

path = /

read only = No

guest ok = Yes

kernel share modes = No

vfs objects = glusterfs

glusterfs:loglevel = 10

glusterfs:logfile = /var/log/samba/glusterfs-testvol.log

glusterfs:volume = testvol

#Restart the Samba service. This is not a compulsory step, as Samba takes the latest smb.conf for new connections. But to make sure it uses the latest smb.conf, restart the service.

$systemctl restart smb

#Set smbpasswd for root. This will be used for mounting the volume/Samba share on the client

$smbpasswd -a root

#Mount the cifs share using the following command and it is ready for use 🙂

mount -t cifs -o username=root,password=<smbpassword> //Server1_IP/testvol /mnt/cifs
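
Once the share is mounted, a quick write test confirms that the share really accepts writes (the thing that breaks when "kernel share modes = No" is missing). This is just a sketch; share_writable is a hypothetical helper and it works on any directory, not only a CIFS mount.

```shell
#!/bin/sh
# Sketch: succeed if a small file can be written to and read back from the
# given directory, then clean up. share_writable is a hypothetical helper.
share_writable() {
    probe="$1/.write-test.$$"
    if { echo "probe" > "$probe"; } 2>/dev/null && [ "$(cat "$probe")" = "probe" ]; then
        rm -f "$probe"
        return 0
    fi
    rm -f "$probe"
    return 1
}
```

For example: share_writable /mnt/cifs && echo "share is writable".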

GlusterFS volume tuning for volume shared through Samba:

- The Gluster volume needs to have: "gluster volume set volname server.allow-insecure on"

- In /etc/glusterfs/glusterd.vol of each gluster node, add "option rpc-auth-allow-insecure on"

- Restart glusterd on each node.
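
The glusterd.vol change can be scripted roughly as below. This is a sketch under an assumption: that glusterd.vol has the usual layout where the options sit between a "volume management" line and a closing "end-volume" line. allow_insecure_rpc is a hypothetical helper name; take a backup of the file before trying it.

```shell
#!/bin/sh
# Sketch: add "option rpc-auth-allow-insecure on" to a glusterd.vol file
# unless it is already there. Assumes the file ends with an "end-volume" line.
# allow_insecure_rpc FILE  (hypothetical helper)
allow_insecure_rpc() {
    vol_file=$1
    if grep -q 'option rpc-auth-allow-insecure on' "$vol_file"; then
        return 0   # already present, nothing to do
    fi
    tmp=$(mktemp) || return 1
    # Print the new option just before the closing "end-volume" line
    awk '/^end-volume/ { print "    option rpc-auth-allow-insecure on" } { print }' \
        "$vol_file" > "$tmp" &&
        cat "$tmp" > "$vol_file"   # cat keeps the original owner and mode
    status=$?
    rm -f "$tmp"
    return $status
}
```

On each node it would be used as: allow_insecure_rpc /etc/glusterfs/glusterd.vol && systemctl restart glusterd. Running it twice is harmless because of the grep check at the top.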

For setups where the Samba server and the Gluster nodes need to be on different machines:

# put "glusterfs:volfile_server = <server name/ip>" in the smb.conf settings for the specific volume

e.g.:

[testvol]

comment = For samba share of volume testvol

path = /

read only = No

guest ok = Yes

kernel share modes = No

vfs objects = glusterfs

glusterfs:loglevel = 7

glusterfs:logfile = /var/log/samba/glusterfs-testvol.log

glusterfs:volfile_server = <server name/ip>

glusterfs:volume = testvol

#Here are the packages that were installed on the nodes

rpm -qa | grep gluster

glusterfs-libs-3.4.2-1.fc20.x86_64

glusterfs-api-3.4.2-1.fc20.x86_64

glusterfs-3.4.2-1.fc20.x86_64

glusterfs-cli-3.4.2-1.fc20.x86_64

glusterfs-server-3.4.2-1.fc20.x86_64

samba-vfs-glusterfs-4.1.3-2.fc20.x86_64

glusterfs-devel-3.4.2-1.fc20.x86_64

glusterfs-fuse-3.4.2-1.fc20.x86_64

glusterfs-api-devel-3.4.2-1.fc20.x86_64

[root@dhcp159-242 ~]# rpm -qa | grep samba

samba-client-4.1.3-2.fc20.x86_64

samba-4.1.3-2.fc20.x86_64

samba-vfs-glusterfs-4.1.3-2.fc20.x86_64

samba-libs-4.1.3-2.fc20.x86_64

samba-common-4.1.3-2.fc20.x86_64

Note: The same smb.conf entries should work with CentOS6 too.


Source: https://lalatendu.org/page/2/
